Visual Studio Code is free and available on your favorite platform: Linux, macOS, and Windows. Download Visual Studio Code to experience a redefined code editor, optimized for building and debugging modern web and cloud applications.

May 10, 2016

In Server Properties, under Connections, select the Allow remote connections to this server check box and then click OK.

Allow SQL Server in Firewall Settings: You need to add a Windows Firewall exception on the server for SQL TCP ports 1433 and 1434 so that remote clients can connect to SQL Server.

Visual Studio 2019 enables you to build console applications and ASP.NET applications that target macOS. However, debugging is not supported. For additional macOS development tool choices, try Visual Studio Code or Visual Studio for Mac. Visual Studio Code provides a streamlined, extensible developer tool experience for macOS.

Databricks Connect allows you to connect your favorite IDE (IntelliJ, Eclipse, PyCharm, RStudio, Visual Studio), notebook server (Zeppelin, Jupyter), and other custom applications to Azure Databricks clusters.

This article explains how Databricks Connect works, walks you through the steps to get started with Databricks Connect, explains how to troubleshoot issues that may arise when using Databricks Connect, and describes the differences between running using Databricks Connect and running in an Azure Databricks notebook.

Overview

Databricks Connect is a client library for Databricks Runtime. It allows you to write jobs using Spark APIs and run them remotely on an Azure Databricks cluster instead of in the local Spark session.

For example, when you run the DataFrame command spark.read.parquet(...).groupBy(...).agg(...).show() using Databricks Connect, the parsing and planning of the job runs on your local machine. Then, the logical representation of the job is sent to the Spark server running in Azure Databricks for execution in the cluster.

With Databricks Connect, you can:

- Run large-scale Spark jobs from any Python, Java, Scala, or R application. Anywhere you can import pyspark, import org.apache.spark, or require(SparkR), you can now run Spark jobs directly from your application, without needing to install any IDE plugins or use Spark submission scripts.

- Step through and debug code in your IDE even when working with a remote cluster.

- Iterate quickly when developing libraries. You do not need to restart the cluster after changing Python or Java library dependencies in Databricks Connect, because client sessions are isolated from each other in the cluster.

- Shut down idle clusters without losing work. Because the client application is decoupled from the cluster, it is unaffected by cluster restarts or upgrades, which would normally cause you to lose all the variables, RDDs, and DataFrame objects defined in a notebook.

Requirements

Only the following Databricks Runtime versions are supported:

- Databricks Runtime 7.3 LTS ML, Databricks Runtime 7.3 LTS

- Databricks Runtime 7.1 ML, Databricks Runtime 7.1

- Databricks Runtime 6.4 ML, Databricks Runtime 6.4

- Databricks Runtime 5.5 LTS ML, Databricks Runtime 5.5 LTS

The minor version of your client Python installation must be the same as the minor Python version of your Azure Databricks cluster. The table shows the Python version installed with each Databricks Runtime.

| Databricks Runtime version | Python version |
|---|---|
| 7.3 LTS ML, 7.3 LTS | 3.7 |
| 7.1 ML, 7.1 | 3.7 |
| 6.4 ML, 6.4 | 3.7 |
| 5.5 LTS ML | 3.6 |
| 5.5 LTS | 3.5 |

For example, if you’re using Conda on your local development environment and your cluster is running Python 3.5, you must create a Conda environment with Python 3.5.
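The table can be turned into a small lookup. The following Python sketch maps each supported runtime to its Python version and builds the matching conda create command. The function name conda_create_command and the default environment name dbconnect are hypothetical choices and are not part of Databricks Connect.

```python
# Python version installed with each supported Databricks Runtime, per the table above.
RUNTIME_PYTHON = {
    "7.3 LTS ML": "3.7",
    "7.3 LTS": "3.7",
    "7.1 ML": "3.7",
    "7.1": "3.7",
    "6.4 ML": "3.7",
    "6.4": "3.7",
    "5.5 LTS ML": "3.6",
    "5.5 LTS": "3.5",
}


def conda_create_command(runtime: str, env_name: str = "dbconnect") -> str:
    """Return a conda command that creates an environment with the cluster's Python minor version.

    env_name is a hypothetical default; any environment name works.
    """
    try:
        python_version = RUNTIME_PYTHON[runtime]
    except KeyError:
        raise ValueError(f"unsupported Databricks Runtime: {runtime!r}") from None
    return f"conda create --name {env_name} python={python_version}"


print(conda_create_command("5.5 LTS"))  # conda create --name dbconnect python=3.5
```

Pinning only the minor version (for example python=3.5) lets conda choose the newest patch release, which is fine because only the minor version has to match.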

The Databricks Connect major and minor package version must always match your Databricks Runtime version. Databricks recommends that you always use the most recent package of Databricks Connect that matches your Databricks Runtime version. For example, when using a Databricks Runtime 7.3 LTS cluster, use the databricks-connect==7.3.* package.

Note

See the Databricks Connect release notes for a list of available Databricks Connect releases and maintenance updates.

Java Runtime Environment (JRE) 8. The client does not support JRE 11.

Set up client

Step 1: Install the client

Uninstall PySpark. This is required because the databricks-connect package conflicts with PySpark. For details, see Conflicting PySpark installations.

Install the Databricks Connect client.

Note

Always specify databricks-connect==X.Y.* instead of databricks-connect=X.Y to make sure that the newest package is installed.
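To illustrate the pinning rule, the following Python sketch turns a Databricks Runtime version string such as "7.3 LTS ML" into the matching pip requirement specifier. The function name dbconnect_requirement is hypothetical.

```python
import re


def dbconnect_requirement(runtime: str) -> str:
    """Build a pip requirement that pins databricks-connect to the runtime's major.minor version."""
    match = re.match(r"\s*(\d+)\.(\d+)", runtime)
    if match is None:
        raise ValueError(f"cannot parse a major.minor version from {runtime!r}")
    major, minor = match.groups()
    # The trailing .* lets pip pick the newest maintenance release of that X.Y line.
    return f"databricks-connect=={major}.{minor}.*"


print(dbconnect_requirement("7.3 LTS ML"))  # databricks-connect==7.3.*
```

You would pass the result to pip quoted, for example pip install -U "databricks-connect==7.3.*". The quotes stop the shell from expanding the *.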

Step 2: Configure connection properties

Collect the following configuration properties:

Azure Databricks workspace URL.

Azure Databricks personal access token or an Azure Active Directory token.

- For Azure Data Lake Storage (ADLS) credential passthrough, you must use an Azure Active Directory token. Azure Active Directory credential passthrough is supported only on Standard clusters running Databricks Runtime 7.3 LTS and above, and is not compatible with service principal authentication.

- For more information about Azure Active Directory token refresh requirements, see Refresh tokens for Azure Active Directory passthrough.

The ID of the cluster you created. You can obtain the cluster ID from the URL; for example, a cluster ID looks like 1108-201635-xxxxxxxx.

The unique organization ID for your workspace. See Get workspace, cluster, notebook, model, and job identifiers.

The port that Databricks Connect connects to. The default port is 15001. If your cluster is configured to use a different port, such as 8787, which was given in previous instructions for Azure Databricks, use the configured port number.
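To show where these identifiers come from, the following Python sketch pulls the org ID and the cluster ID out of a cluster page URL. It assumes a URL shaped like the hypothetical example below, with the org ID in the o query parameter and the cluster ID after clusters/ in the fragment. Check this layout against the URL your own workspace shows.

```python
from typing import Optional, Tuple
from urllib.parse import parse_qs, urlparse


def ids_from_cluster_url(url: str) -> Tuple[Optional[str], Optional[str]]:
    """Return (org_id, cluster_id) parsed from an Azure Databricks cluster page URL.

    Assumed URL shape: https://<host>/?o=<org-id>#/setting/clusters/<cluster-id>/configuration
    """
    parsed = urlparse(url)

    # Assumption: the org ID is the value of the "o" query parameter.
    org_values = parse_qs(parsed.query).get("o")
    org_id = org_values[0] if org_values else None

    # Assumption: the cluster ID is the fragment segment right after "clusters".
    cluster_id = None
    segments = parsed.fragment.strip("/").split("/")
    for index, segment in enumerate(segments[:-1]):
        if segment == "clusters":
            cluster_id = segments[index + 1]
            break
    return org_id, cluster_id


# Hypothetical URL for illustration only.
example = (
    "https://adb-1234567890123456.7.azuredatabricks.net/"
    "?o=1234567890123456#/setting/clusters/1108-201635-xxxxxxxx/configuration"
)
print(ids_from_cluster_url(example))  # ('1234567890123456', '1108-201635-xxxxxxxx')
```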

Configure the connection. You can use the CLI, SQL configs, or environment variables. The precedence of configuration methods from highest to lowest is: SQL config keys, CLI, and environment variables.

- CLI

Run databricks-connect configure. The license agreement is displayed.

Accept the license and supply configuration values. For Databricks Host and Databricks Token, enter the workspace URL and the personal access token you collected above.

If you get a message that the Azure Active Directory token is too long, you can leave the Databricks Token field empty and manually enter the token in ~/.databricks-connect.

- SQL configs or environment variables

The following table shows the SQL config keys and the environment variables that correspond to the configuration properties you collected above. To set a SQL config key, use sql('set config=value'). For example: sql('set spark.databricks.service.clusterId=0304-201045-abcdefgh').

| Parameter | SQL config key | Environment variable name |
|---|---|---|
| Databricks Host | spark.databricks.service.address | DATABRICKS_ADDRESS |
| Databricks Token | spark.databricks.service.token | DATABRICKS_API_TOKEN |
| Cluster ID | spark.databricks.service.clusterId | DATABRICKS_CLUSTER_ID |
| Org ID | spark.databricks.service.orgId | DATABRICKS_ORG_ID |
| Port | spark.databricks.service.port | DATABRICKS_PORT |

Important

Databricks does not recommend putting tokens in SQL configurations.
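The precedence rule can be modelled in a few lines. This Python sketch is not how Databricks Connect resolves settings internally. It only mirrors the documented order (SQL config key, then CLI, then environment variable), using the keys and variable names from the table. The cli_conf mapping and its key names are hypothetical stand-ins for whatever the CLI saved.

```python
import os
from typing import Mapping, Optional

# Parameter -> (SQL config key, environment variable), taken from the table above.
SETTINGS = {
    "host": ("spark.databricks.service.address", "DATABRICKS_ADDRESS"),
    "token": ("spark.databricks.service.token", "DATABRICKS_API_TOKEN"),
    "cluster_id": ("spark.databricks.service.clusterId", "DATABRICKS_CLUSTER_ID"),
    "org_id": ("spark.databricks.service.orgId", "DATABRICKS_ORG_ID"),
    "port": ("spark.databricks.service.port", "DATABRICKS_PORT"),
}


def resolve_setting(
    name: str,
    sql_conf: Mapping[str, str],
    cli_conf: Mapping[str, str],
    env: Optional[Mapping[str, str]] = None,
) -> Optional[str]:
    """Return the effective value for one parameter, highest precedence first.

    cli_conf is a hypothetical stand-in for values saved by the CLI; its keys are assumptions.
    """
    if env is None:
        env = os.environ
    sql_key, env_var = SETTINGS[name]
    if sql_key in sql_conf:  # 1. SQL config key
        return sql_conf[sql_key]
    if name in cli_conf:  # 2. CLI configuration
        return cli_conf[name]
    return env.get(env_var)  # 3. environment variable (None if unset)


sql_conf = {"spark.databricks.service.clusterId": "0304-201045-abcdefgh"}
cli_conf = {"cluster_id": "1108-201635-xxxxxxxx", "port": "15001"}
env = {"DATABRICKS_PORT": "8787"}
print(resolve_setting("cluster_id", sql_conf, cli_conf, env))  # 0304-201045-abcdefgh
print(resolve_setting("port", sql_conf, cli_conf, env))  # 15001
```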

Test connectivity to Azure Databricks by running databricks-connect test.

If the cluster you configured is not running, the test starts the cluster, which will remain running until its configured autotermination time.

Set up your IDE or notebook server

This section describes how to configure your preferred IDE or notebook server to use the Databricks Connect client.


Jupyter

The Databricks Connect configuration script automatically adds the package to your project configuration. To get started in a Python kernel, run:

To enable the %sql shorthand for running and visualizing SQL queries, use the following snippet:

PyCharm

The Databricks Connect configuration script automatically adds the package to your project configuration.

Python 3 clusters

When you create a PyCharm project, select Existing Interpreter. From the drop-down menu, select the Conda environment you created (see Requirements).

Go to Run > Edit Configurations.

Add PYSPARK_PYTHON=python3 as an environment variable.

SparkR and RStudio Desktop

Download and unpack the open source Spark onto your local machine. Choose the same version as in your Azure Databricks cluster (Hadoop 2.7).

Run databricks-connect get-jar-dir. This command returns a path like /usr/local/lib/python3.5/dist-packages/pyspark/jars. Copy the file path of one directory above the JAR directory file path, for example, /usr/local/lib/python3.5/dist-packages/pyspark, which is the SPARK_HOME directory.

Configure the Spark lib path and Spark home by adding them to the top of your R script. Set <spark-lib-path> to the directory where you unpacked the open source Spark package in step 1. Set <spark-home-path> to the Databricks Connect directory from step 2.

Initiate a Spark session and start running SparkR commands.
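The SPARK_HOME rule in the second step (one directory above the JAR directory) is easy to script. This is a minimal Python sketch that assumes databricks-connect get-jar-dir prints the JAR directory path on standard output. Both helper names are hypothetical.

```python
import subprocess
from pathlib import Path


def get_jar_dir() -> str:
    """Run `databricks-connect get-jar-dir` and return its output, assumed to be a single path."""
    result = subprocess.run(
        ["databricks-connect", "get-jar-dir"],
        capture_output=True,
        text=True,
        check=True,
    )
    return result.stdout.strip()


def spark_home_from_jar_dir(jar_dir: str) -> str:
    """SPARK_HOME is the directory one level above the JAR directory."""
    return str(Path(jar_dir.strip()).parent)


# On Linux or macOS:
print(spark_home_from_jar_dir("/usr/local/lib/python3.5/dist-packages/pyspark/jars"))
# /usr/local/lib/python3.5/dist-packages/pyspark
```

With Databricks Connect installed, spark_home_from_jar_dir(get_jar_dir()) gives the value to use for <spark-home-path>.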

sparklyr and RStudio Desktop

Note

You can copy sparklyr-dependent code that you’ve developed locally using Databricks Connect and run it in an Azure Databricks notebook or hosted RStudio Server in your Azure Databricks workspace with minimal or no code changes.


Requirements

- sparklyr 1.2 or above.

- Databricks Runtime 6.4 or above with matching Databricks Connect.

Install, configure, and use sparklyr

In RStudio Desktop, install sparklyr 1.2 or above from CRAN or install the latest master version from GitHub.

Activate the Python environment with Databricks Connect installed and run the following command in the terminal to get the <spark-home-path>:

Initiate a Spark session and start running sparklyr commands.

Close the connection.

Resources

For more information, see the sparklyr GitHub README.

For code examples, see sparklyr.

sparklyr and RStudio Desktop limitations

The following features are unsupported:

- sparklyr streaming APIs

- sparklyr ML APIs

- broom APIs

- csv_file serialization mode

- spark submit

IntelliJ (Scala or Java)

Run databricks-connect get-jar-dir.

Point the dependencies to the directory returned from the command. Go to File > Project Structure > Modules > Dependencies > ‘+’ sign > JARs or Directories.

To avoid conflicts, we strongly recommend removing any other Spark installations from your classpath. If this is not possible, make sure that the JARs you add are at the front of the classpath. In particular, they must be ahead of any other installed version of Spark. Otherwise you will either use one of those other Spark versions and run locally, or the code will throw a NoClassDefFoundError.

Check the setting of the breakpoint option in IntelliJ. The default is All and will cause network timeouts if you set breakpoints for debugging. Set it to Thread to avoid stopping the background network threads.

Eclipse

Run databricks-connect get-jar-dir.

Point the external JARs configuration to the directory returned from the command. Go to Project menu > Properties > Java Build Path > Libraries > Add External Jars.

To avoid conflicts, we strongly recommend removing any other Spark installations from your classpath. If this is not possible, make sure that the JARs you add are at the front of the classpath. In particular, they must be ahead of any other installed version of Spark. Otherwise you will either use one of those other Spark versions and run locally, or the code will throw a NoClassDefFoundError.
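The same ordering rule applies when you launch code outside the IDE, for example with java -cp. This hedged Python sketch builds such a classpath string with the Databricks Connect JAR directory first. The function name is hypothetical, and the sketch relies on Java's dir/* classpath wildcard. Ordering only decides which copy of Spark wins, so removing the other installation is still the safer option.

```python
import os
from typing import List


def classpath_with_dbconnect_first(jar_dir: str, existing_entries: List[str]) -> str:
    """Return a classpath string with the Databricks Connect JARs ahead of every other entry."""
    # Java expands a classpath entry ending in "*" to all JAR files in that directory.
    dbconnect_entry = os.path.join(jar_dir, "*")
    # Drop any existing copy of the entry so it appears exactly once, at the front.
    others = [entry for entry in existing_entries if entry != dbconnect_entry]
    return os.pathsep.join([dbconnect_entry] + others)


print(classpath_with_dbconnect_first("/opt/dbconnect/jars", ["/opt/spark/jars/*", "app.jar"]))
# On Linux or macOS: /opt/dbconnect/jars/*:/opt/spark/jars/*:app.jar
```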

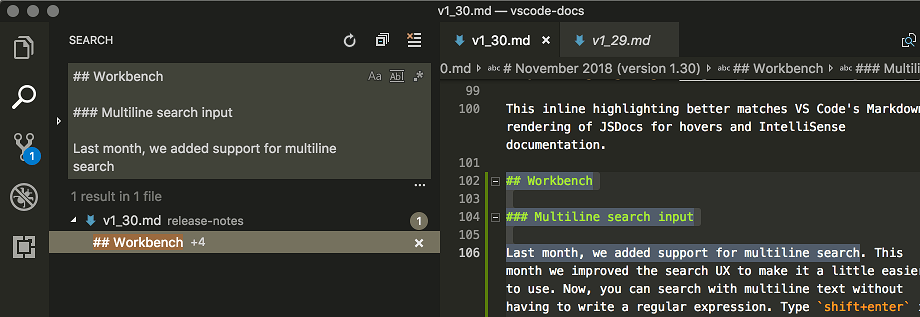

Visual Studio Code

Verify that the Python extension is installed.

Open the Command Palette (Command+Shift+P on macOS and Ctrl+Shift+P on Windows/Linux).

Select a Python interpreter. Go to Code > Preferences > Settings, and choose Python settings.

Run databricks-connect get-jar-dir.

Add the directory returned from the command to the User Settings JSON under python.venvPath. This should be added to the Python Configuration.

Disable the linter. Click the … on the right side and edit the JSON settings.

If you are running with a virtual environment, which is the recommended way to develop for Python in VS Code, type select python interpreter in the Command Palette and point to the environment that matches your cluster Python version. For example, if your cluster is Python 3.5, your local environment should be Python 3.5.
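The settings changes in the steps above can also be applied with a script. This is a minimal Python sketch under two assumptions. The first is that your settings.json is plain JSON, with no comments or trailing commas. The second is that python.linting.enabled, a switch from older releases of the VS Code Python extension, is the right way to disable the linter in your version. The python.venvPath key comes from the steps above, and the helper name is hypothetical.

```python
import json
from pathlib import Path


def update_vscode_settings(settings_file: Path, jar_dir: str) -> dict:
    """Merge the Databricks Connect entries into a VS Code settings.json and return the merged settings."""
    settings = {}
    if settings_file.exists():
        text = settings_file.read_text(encoding="utf-8").strip()
        # json.loads rejects the comments and trailing commas VS Code allows, so plain JSON is assumed.
        settings = json.loads(text) if text else {}
    settings["python.venvPath"] = jar_dir
    # Assumed linter switch from older releases of the VS Code Python extension.
    settings["python.linting.enabled"] = False
    settings_file.write_text(json.dumps(settings, indent=4) + "\n", encoding="utf-8")
    return settings
```

Existing keys are preserved, so the script can be rerun after the jar directory changes.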

SBT

To use SBT, you must configure your build.sbt file to link against the Databricks Connect JARs instead of the usual Spark library dependency. You do this with the unmanagedBase directive in the following example build file, which assumes a Scala app that has a com.example.Test main object:

build.sbt

Run examples from your IDE


Work with dependencies

Typically your main class or Python file will have other dependency JARs and files. You can add such dependency JARs and files by calling sparkContext.addJar('path-to-the-jar') or sparkContext.addPyFile('path-to-the-file'). You can also add Egg files and zip files with the addPyFile() interface. Every time you run the code in your IDE, the dependency JARs and files are installed on the cluster.
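A Python package with several modules is easiest to ship as a zip file. This is a minimal sketch using only the standard library. zip_package is a hypothetical helper. The archive puts the package folder at its root, which is the layout Python's zip import expects.

```python
import shutil
from pathlib import Path


def zip_package(package_dir: str, output_dir: str) -> str:
    """Zip a local Python package so it can be shipped with sparkContext.addPyFile().

    The package folder sits at the root of the archive (mypkg/__init__.py, ...),
    which is the layout Python's zip import needs to resolve `import mypkg`.
    """
    package = Path(package_dir).resolve()
    archive_base = Path(output_dir).resolve() / package.name
    # make_archive appends ".zip" and returns the path of the archive it wrote.
    return shutil.make_archive(
        str(archive_base), "zip", root_dir=str(package.parent), base_dir=package.name
    )
```

On the client, you would then pass the returned path to spark.sparkContext.addPyFile(archive_path) before running code that imports the package.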


Access DBUtils

To access dbutils.fs and dbutils.secrets, you use the Databricks Utilities module.

Python

When using Databricks Runtime 7.1 or below, to access the DBUtils module in a way that works both locally and in Azure Databricks clusters, use the following get_dbutils():

When using Databricks Runtime 7.3 LTS or above, use the following get_dbutils():


Enabling dbutils.secrets.get


Due to security restrictions, calling dbutils.secrets.get requires obtaining a privileged authorization token from your workspace. This is different from your REST API token, and starts with dkea.... The first time you run dbutils.secrets.get, you are prompted with instructions on how to obtain a privileged token. You set the token with dbutils.secrets.setToken(token), and it remains valid for 48 hours.

Access the Hadoop filesystem

You can also access DBFS directly using the standard Hadoop filesystem interface:

Set Hadoop configurations

On the client you can set Hadoop configurations using the spark.conf.set API, which applies to SQL and DataFrame operations. Hadoop configurations set on the sparkContext must be set in the cluster configuration or using a notebook. This is because configurations set on sparkContext are not tied to user sessions but apply to the entire cluster.

Troubleshooting

Run databricks-connect test to check for connectivity issues. This section describes some common issues you may encounter and how to resolve them.

Python version mismatch

Check that the Python version you are using locally has the same minor release as the version on the cluster (for example, 3.5.1 versus 3.5.2 is OK, 3.5 versus 3.6 is not).

If you have multiple Python versions installed locally, ensure that Databricks Connect is using the right one by setting the PYSPARK_PYTHON environment variable (for example, PYSPARK_PYTHON=python3).
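The rule in parentheses compares only the major and minor versions. This is a small Python sketch of that comparison. same_minor_version is a hypothetical helper, and the cluster version would come from the Python version table in Requirements.

```python
import sys


def same_minor_version(local: str, cluster: str) -> bool:
    """True when both version strings share the same major.minor release, e.g. 3.5.1 and 3.5.2."""
    # Compare components as strings so that "3.10" and "3.1" are treated as different.
    return local.split(".")[:2] == cluster.split(".")[:2]


print(same_minor_version("3.5.1", "3.5.2"))  # True
print(same_minor_version("3.5", "3.6"))  # False

local_version = "{}.{}.{}".format(*sys.version_info[:3])
print(same_minor_version(local_version, "3.7"))
```

Run the check with the interpreter that PYSPARK_PYTHON points to, because that is the one Databricks Connect uses.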

Server not enabled

Ensure the cluster has the Spark server enabled with spark.databricks.service.server.enabled true. You should see the following lines in the driver log if it is:

Conflicting PySpark installations

The databricks-connect package conflicts with PySpark. Having both installed will cause errors when initializing the Spark context in Python. This can manifest in several ways, including “stream corrupted” or “class not found” errors. If you have PySpark installed in your Python environment, ensure it is uninstalled before installing databricks-connect. After uninstalling PySpark, make sure to fully re-install the Databricks Connect package:

Conflicting SPARK_HOME

If you have previously used Spark on your machine, your IDE may be configured to use one of those other versions of Spark rather than the Databricks Connect Spark. This can manifest in several ways, including “stream corrupted” or “class not found” errors. You can see which version of Spark is being used by checking the value of the SPARK_HOME environment variable:


Resolution

If SPARK_HOME is set to a version of Spark other than the one in the client, you should unset the SPARK_HOME variable and try again.

Check your IDE environment variable settings, your .bashrc, .zshrc, or .bash_profile file, and anywhere else environment variables might be set. You will most likely have to quit and restart your IDE to purge the old state, and you may even need to create a new project if the problem persists.

You should not need to set SPARK_HOME to a new value; unsetting it should be sufficient.
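This hedged Python sketch implements the check and the fix. It flags a SPARK_HOME that points anywhere other than the Databricks Connect directory (for example, the parent of the databricks-connect get-jar-dir output). It also builds a copy of the environment with the variable unset, for launching a child process. Both helper names are hypothetical.

```python
import os
from pathlib import Path
from typing import Dict, Mapping, Optional


def conflicting_spark_home(env: Mapping[str, str], dbconnect_spark_home: str) -> Optional[str]:
    """Return SPARK_HOME if it is set to anything other than the Databricks Connect directory, else None."""
    current = env.get("SPARK_HOME")
    if current and Path(current).resolve() != Path(dbconnect_spark_home).resolve():
        return current
    return None


def env_without_spark_home(env: Mapping[str, str]) -> Dict[str, str]:
    """Copy of the environment with SPARK_HOME removed; the original mapping is left untouched."""
    cleaned = dict(env)
    cleaned.pop("SPARK_HOME", None)
    return cleaned
```

For example, subprocess.run(["databricks-connect", "test"], env=env_without_spark_home(os.environ)) would run the connectivity test without the stale value. Your IDE and shell profiles still need to be cleaned up separately.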

Conflicting or Missing PATH entry for binaries

It is possible your PATH is configured so that commands like spark-shell will be running some other previously installed binary instead of the one provided with Databricks Connect. This can cause databricks-connect test to fail. You should make sure either the Databricks Connect binaries take precedence, or remove the previously installed ones.

If you can’t run commands like spark-shell, it is also possible your PATH was not automatically set up by pip install and you’ll need to add the installation bin dir to your PATH manually. It’s possible to use Databricks Connect with IDEs even if this isn’t set up. However, the databricks-connect test command will not work.
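To see which binary wins, you can list every PATH entry that provides a command, in lookup order. This is a minimal Python sketch built on shutil.which, and binaries_on_path is a hypothetical name. The first entry is the one your shell runs, and it should be the copy that ships with Databricks Connect. An empty list suggests the installation bin directory is missing from PATH.

```python
import os
import shutil
from typing import List, Optional


def binaries_on_path(command: str, path: Optional[str] = None) -> List[str]:
    """List every executable named `command` on the search path, in lookup order (first one wins)."""
    search_path = os.environ.get("PATH", os.defpath) if path is None else path
    found: List[str] = []
    for directory in search_path.split(os.pathsep):
        if not directory:
            continue
        # With a single-directory path, shutil.which checks only that directory.
        match = shutil.which(command, path=directory)
        if match and match not in found:
            found.append(match)
    return found


print(binaries_on_path("spark-shell"))
```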

Conflicting serialization settings on the cluster

If you see “stream corrupted” errors when running databricks-connect test, this may be due to incompatible cluster serialization configs. For example, setting the spark.io.compression.codec config can cause this issue. To resolve this issue, consider removing these configs from the cluster settings, or setting the configuration in the Databricks Connect client.

Cannot find winutils.exe on Windows

If you are using Databricks Connect on Windows and see:

Follow the instructions to configure the Hadoop path on Windows.

The filename, directory name, or volume label syntax is incorrect on Windows

If you are using Databricks Connect on Windows and see:

Either Java or Databricks Connect was installed into a directory with a space in your path. You can work around this by either installing into a directory path without spaces, or configuring your path using the short name form.
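The error comes from a space somewhere in the Java or Databricks Connect path, so scanning the likely candidates can narrow it down. This Python sketch is illustrative only. The choice of locations to check is an assumption: JAVA_HOME, and the Python prefix that pip normally installs databricks-connect under.

```python
import os
import sys
from typing import Dict, Mapping, Optional


def paths_with_spaces(candidates: Mapping[str, Optional[str]]) -> Dict[str, str]:
    """Return only the candidate paths that contain a space character."""
    return {name: path for name, path in candidates.items() if path and " " in path}


# Assumed candidate locations; add any other install directories you use.
candidates = {
    "JAVA_HOME": os.environ.get("JAVA_HOME"),
    "Python prefix": sys.prefix,
}
print(paths_with_spaces(candidates))
```

Any entry it reports is a candidate for reinstalling into a path without spaces or for switching to its short-name form.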

Refresh tokens for Azure Active Directory passthrough

Azure Active Directory passthrough uses two tokens: the Azure Active Directory access token to connect using Databricks Connect, and the ADLS passthrough token for the specific resource.

- When the Azure Active Directory access token expires, Databricks Connect fails with an Invalid Token error. To extend the lifetime of this token, attach a TokenLifetimePolicy to the Azure Active Directory authorization application used to acquire the access token.

- You cannot extend the lifetime of ADLS passthrough tokens using Azure Active Directory token lifetime policies. As a consequence, if you send a command to the cluster that takes longer than an hour, it will fail if an ADLS resource is accessed after the one hour mark.

Limitations

The following Azure Databricks features and third-party platforms are unsupported:

- The following Databricks Utilities: library, notebook workflow, and widgets.

- Structured Streaming.

- Running arbitrary code that is not a part of a Spark job on the remote cluster.

- Native Scala, Python, and R APIs for Delta table operations (for example, DeltaTable.forPath). However, the SQL API (spark.sql(...)) with Delta Lake operations and the Spark API (for example, spark.read.load) on Delta tables are both supported.

- Apache Zeppelin 0.7.x and below.

- Azure Active Directory credential passthrough is supported only on Standard clusters running Databricks Runtime 7.3 LTS and above, and is not compatible with service principal authentication.

Like Visual Studio Code? Got a Chromebook capable of running Linux Apps? Here’s how to combine the two to get the best of both.

If you don’t fancy a step-by-step guide or a gallery of pretty screenshots, then the following basic instructions — which are correct for a cheap and cheerful Acer Chromebook as of 2020-10-13 — should suffice:

- Upgrade your Chromebook to the latest Chrome OS version

- Download the latest Debian VS Code package from the official release page

- Install the VS Code package by right-clicking on the downloaded .deb file in the Chrome OS Files app and selecting “Install with Linux (Beta)”

- Enjoy VS Code on your Chromebook!

Happy? Got what you were looking for in the time you were willing to invest? Fantastic! Now, join the rest of us for how we got there in the first place (and elaborate security considerations as well as admire some beautiful screenshots along the way)…

When it comes to productivity, convenience really is king for me! Over the past decades I’ve used numerous IDEs, tending to stick with what Worx for Me!™, until either my requirements could no longer be met or something better came along that evolved my workflow altogether.

When I first encountered Visual Studio Code (in short: VS Code), I was initially quite sceptical about yet another fully-customisable editor built in yet another language that had seen a massive spike in popularity over recent years.

But I was also quickly and gladly proven wrong by its value proposition. Over the past years, I’ve found myself slowly transitioning VS Code right into the centre of my productivity toolbox. By now, it’s thoroughly in the middle.

It’s simple. It’s (fairly) lightweight. It’s “batteries included” which means that it’s got most of the things I need right out of the box. And it’s got a million and one extensions for just about everything I need (or have not yet realised that I need them).

VS Code has become the de-facto editor of choice for me — for everything from open source development to writing blog posts in Markdown (yep, also this one).

When it comes to blogging (you’re looking at it), VS Code has supported me in evolving my workflow into a GitOps centric one where I can effectively and efficiently author “articles-as-code” using post2ghost. In short, it Worx for Me!™

So, when finally getting my hands on a cheap and cheerful Acer Chromebook with a full-size and comfortable keyboard, it sounded like the perfect blogging machine. It’s portable. It’s lightweight. And it’s got great battery life for something that has the weight of two tea bags and looks like it did shrink in the wash.

There was only one tiny problem. It didn’t have VS Code on it. Not out of the box. Not in the Chrome Web Store. And not in the Google Play Store either (side note: having Android apps running on Chrome OS is a really differentiating feature and opens the door widely into that giant universe).

It’s not that I was reluctant (some would say stubborn) to consider the switch to another editor. I did try a couple. And miserably failed after about an hour in.

They didn’t have what I needed. They were made for a slightly different purpose. They didn’t integrate with the ecosystem I came to rely upon. But most importantly, they didn’t provide the necessary tools to integrate with my existing workflow. Not good. Because

Regardless of the platform: I need the right tools, to get the job done!

So, the job at hand was fairly straightforward

How to install VS Code on a cheap and cheerful Acer Chromebook?!

At this point, it helps to know that Chromebooks run Chrome OS as their operating system. And with Chrome OS effectively being Linux under the bonnet with a shiny UI on top, the choices are actually plentiful when it comes to running Linux Apps, such as VS Code, on a Chromebook.

The list of general options ranges from

- Parting with Chrome OS entirely and installing another Linux distribution (such as Ubuntu) on the Chromebook, effectively only leveraging the hardware

- Enabling the root user in Chrome OS for unrestricted access

- Enabling developer mode in Chrome OS for local user level access

- Leveraging Android apps such as Termux for local user level access in a terminal emulator and Linux environment app

- Enabling the recently released Linux Apps (Beta) feature

Now, not all of the above options offer the same experience when it comes to installing and subsequently working with Linux Apps. Some of them, especially the ones that forgo the Chrome OS ecosystem entirely or enable special users or modes, offer greater flexibility. However

“With great power comes great responsibility.”— Spider-Man

And in the context of a Chromebook, this means that the newly gained flexibility can come at a steep price: a drastic impact on security when not properly attended to.

Inspired by a post about a USD 169 Developer Chromebook that discussed the security implications of several options in detail, the big security principles for the VS Code installation on my Chromebook became quite clear

- Chrome OS would remain as the single operating system of the Chromebook

- Privileged access would remain disabled

- No special modes or native tooling would be enabled that could potentially weaken the security posture of the Chromebook

- The VS Code installation would have to leverage native Chrome OS features without installing another Linux OS on the side, as

  - Space is already scarce on the Chromebook and

  - Any additional Linux installation would most likely

    - Bring its own ecosystem

    - Effectively require constant manual maintenance as support for it may not be natively integrated into Chrome OS

    - Have an impact on battery life as it cannot leverage native OS support for resource-intensive applications like X-Server

- Stay within the current manufacturer-supported bounds of Chrome OS

The above security principles effectively leave option 5 from the list of options at the start of this section as the only viable option. And that’s not necessarily a bad choice. All other options are basically side-stepping the manufacturer’s support and hence can make a Chromebook less secure.

Let’s be absolutely clear about this: All of the above options make a Chromebook less secure (but so does connecting it to the internet in the first place). So, the real question becomes

How and when would you like your Chromebook to be hacked?!

It’s just that option 5 seems to be able to prolong the inevitable long enough to strike a decent balance between usability and security.

During the process of installing VS Code on my Chromebook, I found myself looking up several things while working through the problems encountered en route.

In the spirit of modern systems and software architecture, the below is merely the result of carefully sellotaping together readily available components I leveraged on the end-to-end journey to eventually achieve my goal.

As well as a screenshot-bonanza, I have to admit. But then they are so descriptive. Let alone beautiful.

A Fresh Start

The first useful step for every installation journey is to depart from a known location. Hence, I decided to start from a fresh installation of Chrome OS as I didn’t fancy any leftovers from previous (read: failed) experiments (I have to admit that I did try options 2, 3, and 4 from the above list before eventually settling for option 5).

The factory reset process is fairly straightforward and well documented (as a lot of things around Chromebooks actually are — thanks, Google!). Once complete, the factory reset Chromebook did eventually greet me with the below screen.

Upgrading Chrome OS

One of the great features of Chromebooks is that they come with automatic and free upgrades for years to come. Running the latest and greatest Chrome OS version not only remediates known security vulnerabilities but also enables new features such as Linux Apps (Beta). And we want both!

Once again, the upgrade process is fairly straightforward and well documented. In short, the process for me was

- Clicking on the time in the bottom right corner opened a panel with several options

- In the panel that opened, selecting the cog symbol opened up the Chromebook’s settings menu in a separate window

- In the Chromebook’s settings menu, choosing the “About Chrome OS” option on the left hand side eventually displayed the below screen

- Clicking on the “Check for Update” button did (and still does) what it says on the tin; if there’s an update available, then the button subsequently allows me to install the new Chrome OS version. As I was already running the latest version of Chrome OS at the time of writing, I received the below screen

Enabling Linux Apps (Beta)

With the latest Chrome OS version installed, it was time to enable Linux Apps (Beta). As you would expect by now, this is again fairly straightforward and well documented. The process for me was (skip ahead to step 3 if you still have the Chromebook’s settings menu open)

- Clicking on the time in the bottom right corner opened a panel with several options

- In the panel that opened, selecting the cog symbol opened up the Chromebook’s settings menu in a separate window

- In the Chromebook’s settings menu, choosing the “Linux (Beta)” option displayed the below screen

- Clicking the “Turn on” button then displayed the below screen

- Clicking on “Next” displayed the below screen where I changed the default username from dominicdumrauf to dominic for the sake of simplicity

- Clicking on “Install” then displayed the below screen. At this point, it was time to grab a cuppa, while Chrome OS (as of 2020-07-12) was going to

  - download the virtual machine

  - start the virtual machine

  - start the Linux container

  - set up the Linux container

  - start the Linux container (again)

- At the end of the installation process, I was eventually greeted with a new blank window (remember, my Acer Chromebook is cheap and cheerful) as per below. Depending on the speed of your machine, this step might hardly be noticeable for you.

- In my case, the blank screen was thankfully short lived while the new Terminal application was opening up with the resulting below screen

Pinning Terminal to Shelf (Optional)

The Terminal application was automatically launched at that point in time, but re-launching it required digging through some menus. While pinning the Terminal application to the shelf (also known as the Dock on macOS) is entirely optional, it does make later access a lot easier.

The process for me was

- Clicking on the circle icon (also referred to as “Launcher”) in the bottom left corner and extending it all the way, by clicking on the topmost chevron symbol (“^”), opened up a panel with the installed applications

- Scrolling down to the “Linux apps” and clicking on the collection opened up an overlay with the Terminal application in it

- Right clicking on the Terminal application opened up another menu as per below

- Clicking on “Pin to shelf” closed the second menu and pinned the Terminal application to the shelf as per below

Downloading the Right VS Code Package

With Linux Apps (Beta) installed and the Terminal application pinned to the shelf for easier access, it was time to start with the actual VS Code installation.

Here, the only tricky bit is to know which Linux distribution to download from the official VS Code release page. While the method of looking up the distribution name seems to differ between Linux distributions, a quick lookup in the Terminal application eventually revealed that it was in fact a fairly recent Debian Buster installation.
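One way to look up the distribution name is to read the standard /etc/os-release file, which Debian provides. The Python sketch below assumes that file is present. The parser is deliberately simplified: it does not handle escape sequences inside quoted values. The helper names are hypothetical. ID=debian together with VERSION_CODENAME=buster points to the .deb package.

```python
from pathlib import Path
from typing import Dict


def parse_os_release(text: str) -> Dict[str, str]:
    """Parse KEY=value lines from an os-release file, dropping optional surrounding quotes."""
    info: Dict[str, str] = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue  # skip blanks, comments, and malformed lines
        key, _, value = line.partition("=")
        value = value.strip()
        if len(value) >= 2 and value[0] == value[-1] and value[0] in "\"'":
            value = value[1:-1]
        info[key.strip()] = value
    return info


def read_os_release(path: str = "/etc/os-release") -> Dict[str, str]:
    """Read and parse the os-release file of the running Linux system."""
    return parse_os_release(Path(path).read_text(encoding="utf-8"))
```

Inside the Linux container, print(read_os_release().get("PRETTY_NAME")) shows the distribution name. On Python 3.10 and later, platform.freedesktop_os_release() offers the same information without a custom parser.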

With the Linux distribution name at hand, the process for me was

- Opening Chrome (or any other browser) and surfing to the official VS Code download page

- Clicking on the box labelled “.deb Debian, Ubuntu” as in below screen

- On the page that opened up, waiting for the automatic download to finish as in below screen

Installing the VS Code Package

With the correct VS Code package downloaded, it was time to finally install it. This boiled down to opening the Chrome OS Files app and installing the package via the right-click menu item “Install with Linux (Beta)”.

The process for me was

- Clicking on the Launcher icon in the bottom left corner opened up a panel

- Typing in “Files” revealed the Chrome OS Files app as in below screen

- Clicking on the “Files” app launched it

- In the “Files” app, clicking on the “Downloads” folder displayed its contents

- Right-clicking on the .deb package downloaded in the previous section opened up the file-specific menu as in below screen

- Clicking on “Install with Linux (Beta)” brought up the “Install app with Linux (Beta)” dialogue as in below screen

- Clicking on “Install” started the installation with the Linux installer reporting progress as in below screen

- Waiting until the Linux installer eventually reported success as in below screen

- Clicking “OK” on the remaining “Install app with Linux (Beta)” dialogue as in below screen closed it

- (Optional cleanup) Right-clicking on the .deb package opened up the file-specific menu again as in below screen

- (Optional cleanup) Clicking on “Delete” removed the item from the “Downloads” folder

Launching VS Code

Once the above steps had successfully completed, VS Code was finally installed on my Chromebook. Now, there are several methods of actually launching it.

Regardless of the option, bear in mind that VS Code is running in the Linux Apps (Beta) VM, which may need to be started first and could hence slightly delay things.

From the Terminal Application

VS Code can be launched from the Terminal via the code command and will eventually open in its own window.

From the Shelf

An easier way of launching VS Code is from the shelf. Similar to pinning the Terminal application to the shelf, the process for me was

- Clicking on the Launcher icon in the bottom left corner and extending it all the way, by clicking on the topmost chevron symbol (“^”), opened up a panel with the installed applications

- Scrolling down to the “Linux apps” and clicking on the collection opened up an overlay with the Terminal application and VS Code application in it

- Right clicking on the VS Code application opened up another menu as per below

- Clicking on “Pin to shelf” closed the second menu and pinned the VS Code application to the shelf as per below

Regardless of launching VS Code via the Terminal or via the shelf, the end result for me is my current favourite editor on a cheap and cheerful Acer Chromebook. Plus all the right tools to get the job done!


And best of all, VS Code also has full screen integration, which makes it great for distraction-free writing as per below screen

At the end, the shiny home screen of my Chromebook now has a full Terminal application. And there’s VS Code. Ready to get cracking.

Well, after transferring all necessary secrets and settings to the Chromebook over HTTP using nothing more than on-board tools from both my Mac and my Chromebook. A sort of poor man’s HTTPS, when there’s no real HTTPS available.

Time will tell if my cheap and cheerful Acer Chromebook turns out to be the blogging machine I hoped it would be. But at least now, it would not have been the tools standing in the way of its success.

While the above Worx for Me!™ when it comes to installing VS Code on a cheap and cheerful Acer Chromebook, you may have an alternative or better way.

Think this is all rubbish, massively overrated, or heading into the absolutely wrong direction in general?! Feel free to reach out to me on LinkedIn and teach me something new!

As always, prove me wrong and I’ll buy you a pint!

Subscribe to How Hard Can It Be?!

Get the latest posts by following our LinkedIn page